Re-labeling ImageNet: from Single to Multi-Labels, from Global to Localized Labels

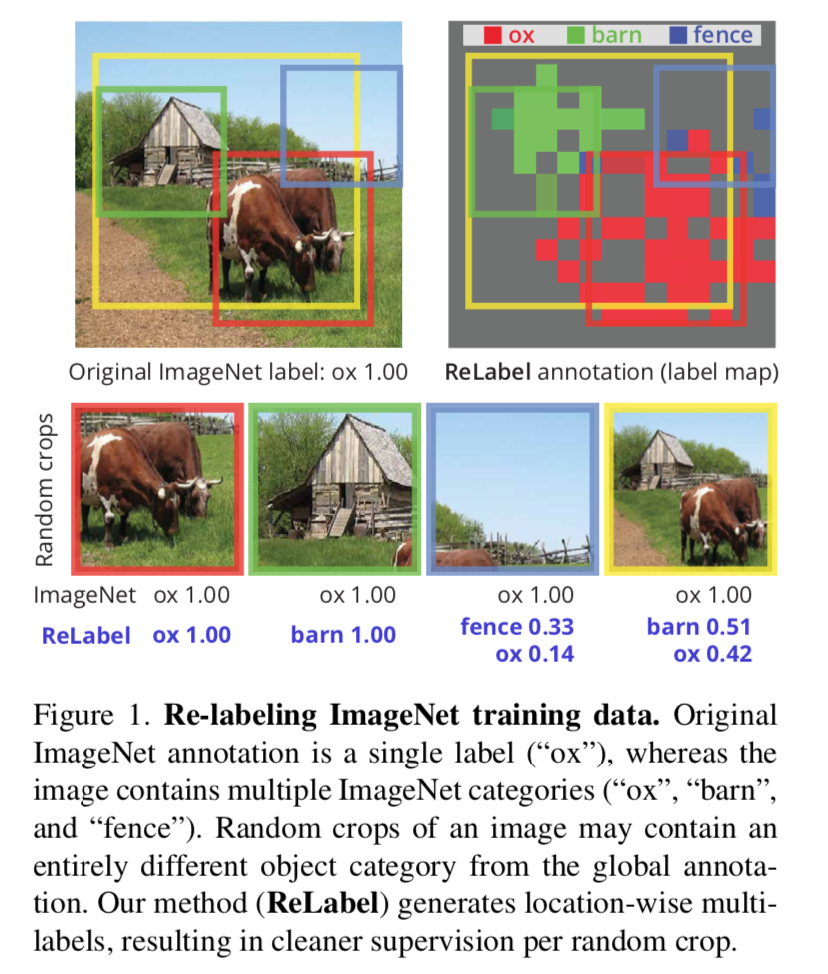

Motivation

- label noise

- single-label benchmark

- but contains multiple classes in one sample

- a random crop may contain an entirely different object from the gt label

- exhaustive multi-label annotation of every image is too costly

- mismatch

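- researchers refine the validation set with multi-labels

- propose new multi-label evaluation metrics

- but this creates a mismatch within the dataset: the training set stays single-label while the validation set becomes multi-label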

- we propose

- re-label

- use a strong image classifier trained on an extra source of data to generate the multi-labels

- use the pixel-wise multi-label predictions before GAP: additional location-specific supervision

- then trained on re-labeled samples

- further boost with CutMix

- from single to multi-labels: multi-label targets

from global to localized: dense prediction map

Key arguments

- single-label

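- mismatch with the multi-label validation set

- random crop augmentation makes the problem worse

- besides multiple objects there is also the foreground/background issue: only 23% of random crops have IoU > 0.5 with the foreground object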
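The IoU criterion behind the 23% figure can be illustrated with a small helper. This is a minimal sketch in plain Python; the function name `iou` and the example boxes are hypothetical and only show how a crop is compared with an object box.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle.
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical object box and two candidate random crops.
obj = (100, 100, 300, 300)
good_crop = (80, 80, 320, 320)   # covers the whole object
bad_crop = (0, 0, 150, 150)      # touches only a corner of the object

print(iou(obj, good_crop) > 0.5)  # crop that still matches the image-level label
print(iou(obj, bad_crop) > 0.5)   # crop for which the image-level label is misleading
```

A crop like `bad_crop` would still be trained with the image-level label, even though it contains almost none of the labeled object.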

- ideal labels

- the full set of classes: multi-label

- where each object is: localized label

- results in a dense pixel labeling $L\in \{0,1\}^{H\times W\times C}$

we propose a re-labeling strategy

- ReLabel

- strong classifier

- external training data

- generate feature map predictions

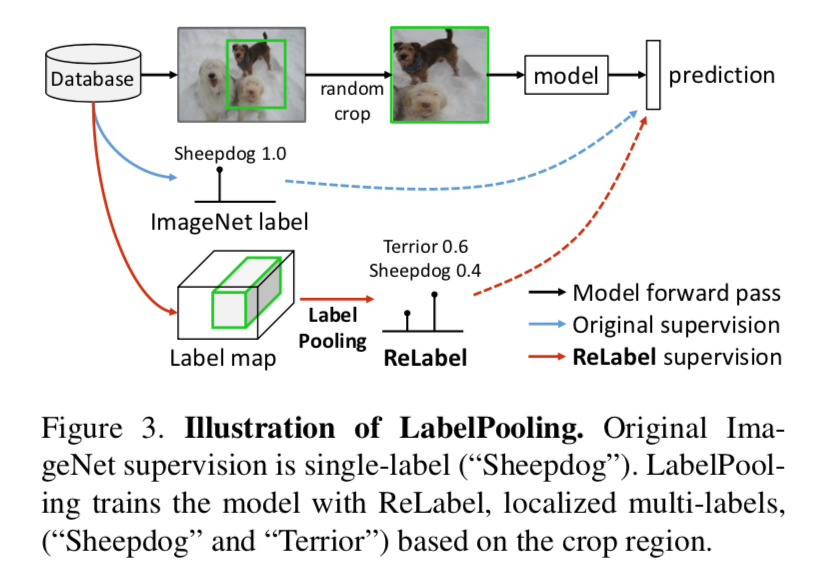

- LabelPooling

- with dense labels & random crop

- pooling the label scores from crop region

evaluations

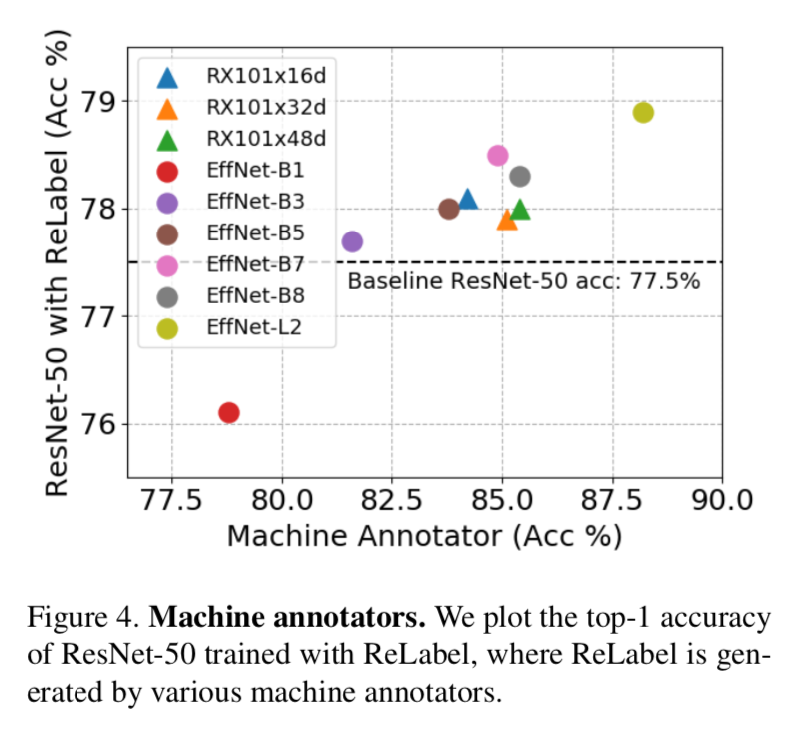

- baseline r50: 77.5%

- r50 + ReLabel: 78.9%

- r50 + ReLabel + CutMix: 80.2%

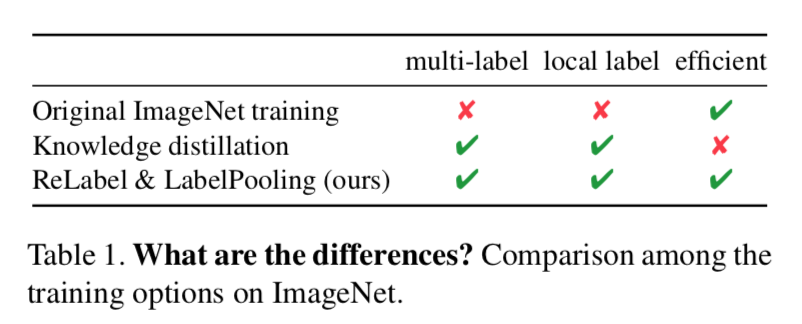

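[QUESTION] Both approaches bring in external data for a "painless" accuracy gain. How does this differ from Noisy Student, and which is better?

So far the only difference the paper mentions is efficiency: ReLabel is a one-time cost, whereas knowledge distillation is iterative and on-the-fly.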

Method

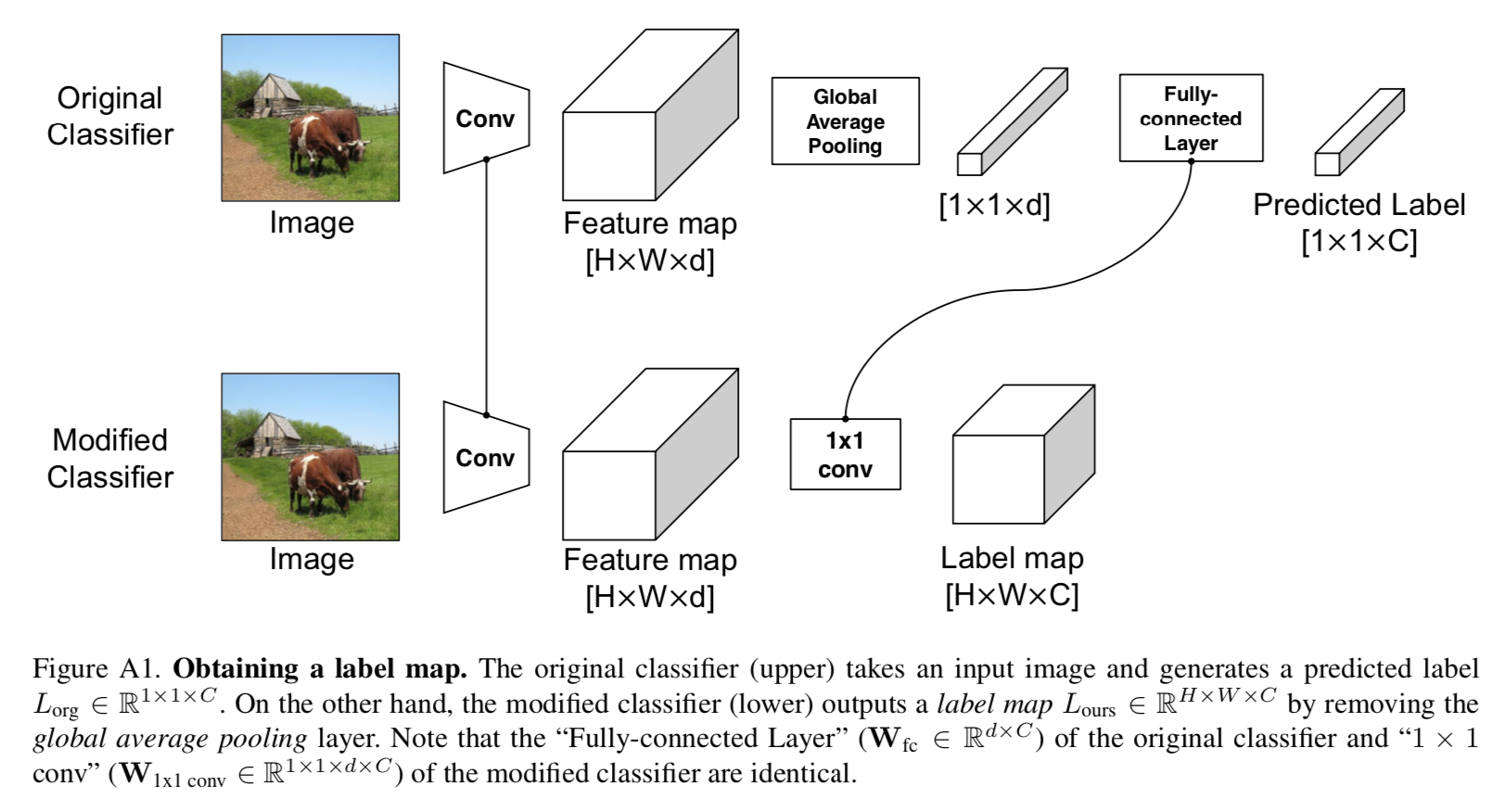

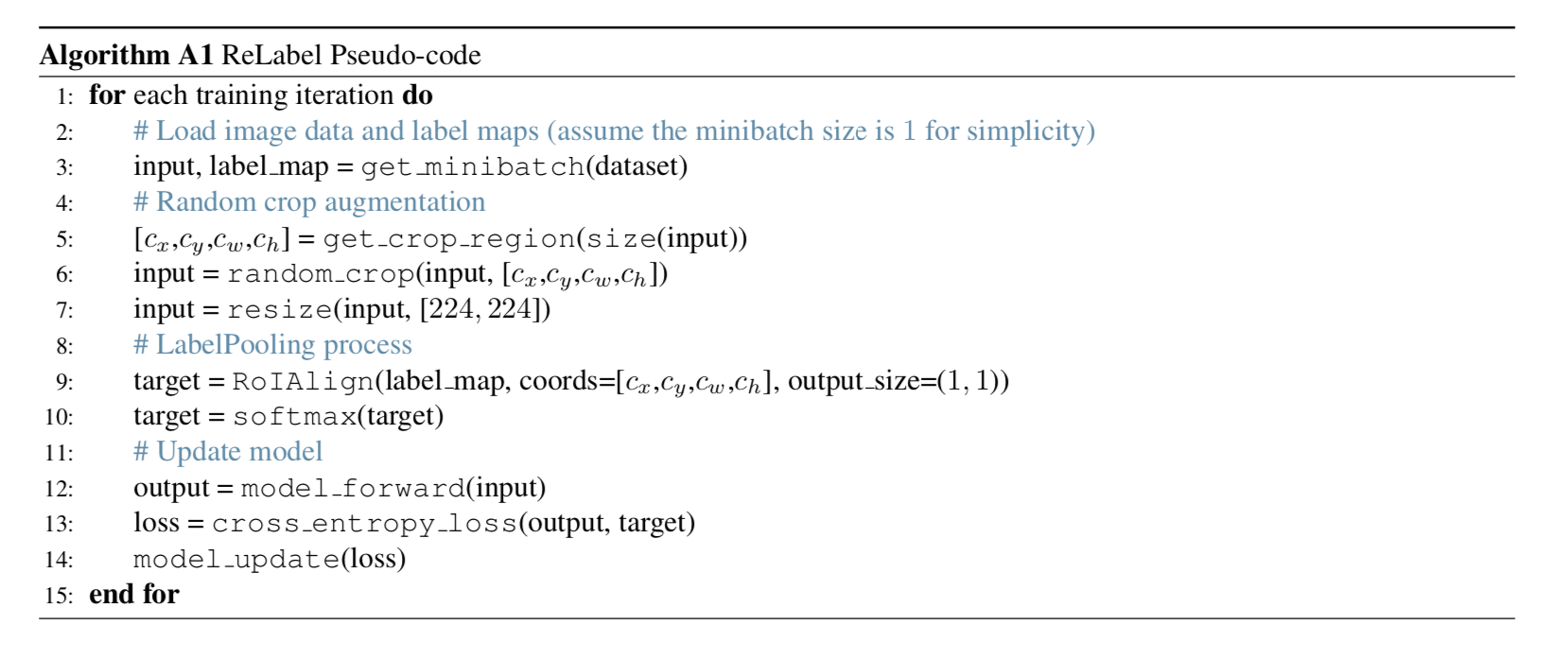

Re-labeling

super annotator

- state-of-the-art classifier

- trained on super large dataset

- fine-tuned on ImageNet

- and predict ImageNet labels

we use open-source trained weights as annotators

- though trained with single-label supervision

- still tend to make multi-label predictions

- EfficientNet-L2

- input size 475

- feature map size 15x15x5504

output dense label size 15x15x1000

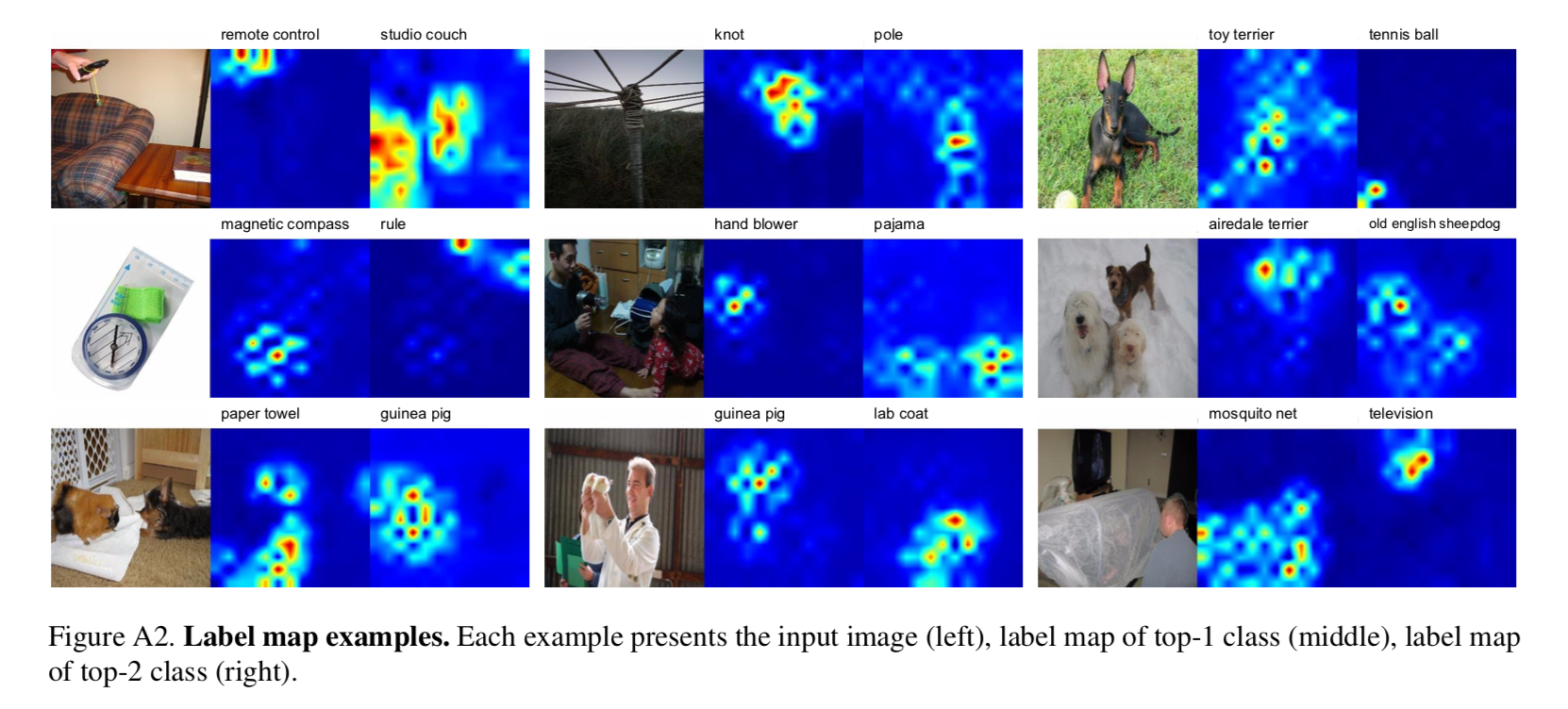

location-specific labels

- remove GAP heads

- add a 1x1 conv

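- put plainly, this is just a fully convolutional network (FCN)

- the weights of the newly added 1x1 conv layer are identical to the FC-layer weights of the original classifier

Each channel of the label map is the heatmap of one class; the heatmaps are disjointly located at each object's position.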
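Because global average pooling and a fully connected layer are both linear, reusing the FC weights as a 1x1 conv can be checked numerically. The following is a minimal NumPy sketch with toy sizes; the variable names and random weights are illustrative assumptions, not the annotator's actual parameters.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy sizes; the notes give a 15x15x5504 feature map and 1000 classes.
H, W, D, C = 15, 15, 64, 10

features = rng.standard_normal((H, W, D))  # feature map before GAP
fc_w = rng.standard_normal((D, C))         # FC weights of the original classifier
fc_b = rng.standard_normal(C)              # FC bias

# Original head: GAP, then FC, giving one logit vector per image.
global_logits = features.mean(axis=(0, 1)) @ fc_w + fc_b

# Dense head: the same FC weights applied at every location (a 1x1 conv).
dense_logits = features @ fc_w + fc_b      # shape (H, W, C)

# Averaging the dense logits recovers the original image-level logits.
print(dense_logits.shape)
print(np.allclose(dense_logits.mean(axis=(0, 1)), global_logits))
```

The dense output keeps one score vector per spatial location, which is what makes the per-class heatmaps available without retraining the annotator.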

LabelPooling

- loads the pre-computed label map

- region pooling (RoIAlign) on the label map

- GAP + softmax to get multi-label vector

- train a classifier with the multi-label vector

uses CE

choices

space consumption

- mainly the space needed to store the label maps

- store only top-5 predictions per image: 10GB
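One way to keep only the top-5 predictions is sketched below. It assumes the top-k is taken per spatial location and stored as half-precision scores with 16-bit class indices; the notes only say "top-5 predictions per image", so both choices, along with the names `compress_topk` and `decompress_topk`, are assumptions for illustration.

```python
import numpy as np


def compress_topk(label_map, k=5):
    """Keep the k largest scores per location; returns (values, class indices)."""
    idx = np.argsort(label_map, axis=-1)[..., -k:]      # (H, W, k) class ids
    vals = np.take_along_axis(label_map, idx, axis=-1)  # (H, W, k) scores
    return vals.astype(np.float16), idx.astype(np.int16)


def decompress_topk(vals, idx, num_classes, fill=0.0):
    """Rebuild a dense (H, W, num_classes) map; dropped entries are set to `fill`."""
    H, W, _ = vals.shape
    dense = np.full((H, W, num_classes), fill, dtype=np.float32)
    np.put_along_axis(dense, idx.astype(np.int64), vals.astype(np.float32), axis=-1)
    return dense


rng = np.random.default_rng(0)
full_map = rng.standard_normal((15, 15, 1000)).astype(np.float32)  # toy label map

vals, idx = compress_topk(full_map, k=5)
restored = decompress_topk(vals, idx, num_classes=1000)
print(vals.shape, idx.shape, restored.shape)
```

The compressed form stores 5 entries per location instead of 1000, at the cost of discarding the low-scoring classes.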

time consumption

- mainly the one-shot inference time for generating the label maps plus the extra computation introduced by LabelPooling

- relabeling: 10 GPU-hours

- LabelPooling: 0.5% additional training time

- more efficient than KD

annotators

Which annotator is best: so far, supervision from EfficientNet-L2 gives the strongest results

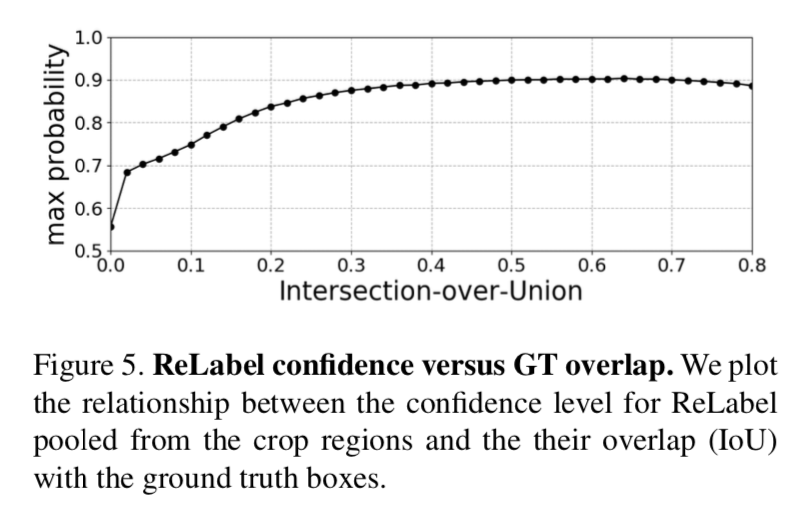

supervision confidence

- as the IoU between the image crop and the foreground object increases, the confidence gradually increases

This shows that the supervision conveys some uncertainty when the IoU is low.

Experiments