SpineNet: Learning Scale-Permuted Backbone for Recognition and Localization

Motivation

- object detection task

- requiring simultaneous recognition and localization

- an encoder alone does not perform well

- encoder-decoder architectures are also ineffective

propose SpineNet

- scale-permuted intermediate features

- cross-scale connections

- searched by NAS on the COCO detection task

- can transfer to classification tasks

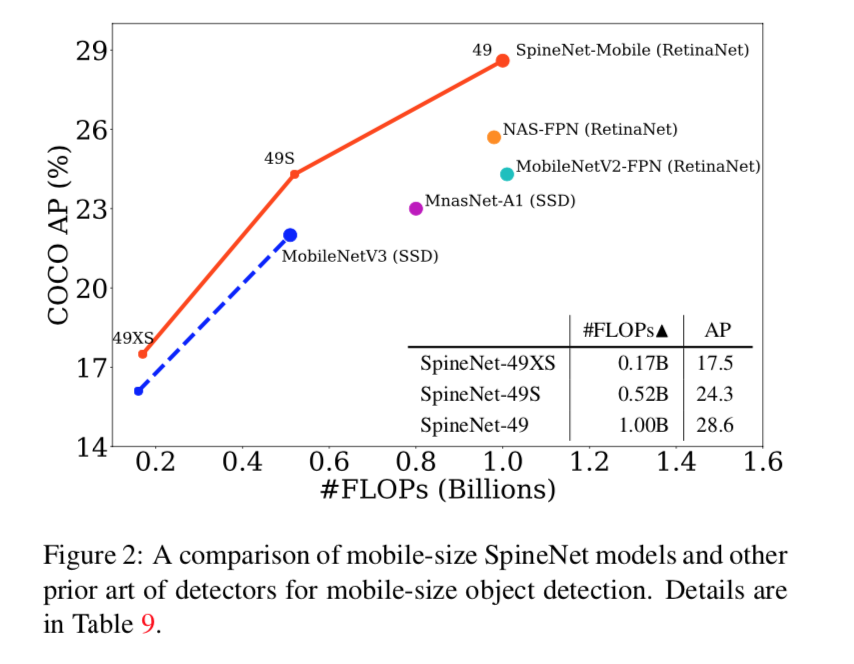

Leads in accuracy on one-stage detectors with both lightweight and heavyweight backbones

Arguments

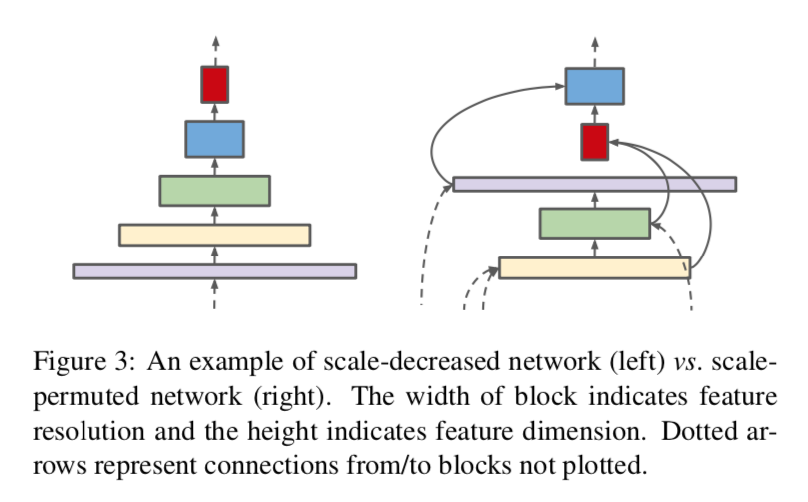

- scale-decreasing backbone

- throws away the spatial information by down-sampling

- challenging to recover

- followed by a lightweight FPN

- scale-permuted model

- scales of features can increase or decrease at any point: retains the spatial information

- connections go across scales: multi-scale fusion

- searched by NAS

- acts as a complete FPN in itself, not a separable encoder-decoder form

- directly connect to classification and bounding box regression subnets

- based on ResNet-50

- use bottleneck feature blocks

- two inputs for each feature block

- roughly the same computation

Method

formulation

- overall architecture

- stem: scale-decreased architecture

- scale-permuted network

- blocks in the stem network can be candidate inputs for the following scale-permuted network

- scale-permuted network

- building blocks: $B_k$

- feature levels: $L_3$ to $L_7$

- output features: 1x1 conv, $P_3$ to $P_7$
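A small sketch of what the feature levels mean in terms of resolution. It relies on the usual convention that a level-$l$ feature map has stride $2^l$ relative to the input; the 640-pixel input size and the function names are assumptions chosen for illustration, not values from these notes.

```python
def level_stride(level: int) -> int:
    """Stride of feature level L_level relative to the input image (2 ** level)."""
    return 2 ** level


def level_resolution(input_size: int, level: int) -> int:
    """Side length of the feature map at the given level.

    Assumes a square input whose side is divisible by the level's stride.
    """
    stride = level_stride(level)
    if input_size % stride != 0:
        raise ValueError(f"input size {input_size} is not divisible by stride {stride}")
    return input_size // stride


# Hypothetical 640x640 input: list the output levels L3..L7.
for level in range(3, 8):
    side = level_resolution(640, level)
    print(f"L{level}: stride {level_stride(level)}, feature map {side}x{side}")
```

Each step up in level halves the spatial resolution, which is why moving features between levels needs the resampling described under cross-scale connections.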

search space

scale-permuted network:

- blocks can only connect forward, from earlier blocks to later ones

- based on ResNet blocks

- 256 channels for $L_5$, $L_6$, $L_7$ blocks

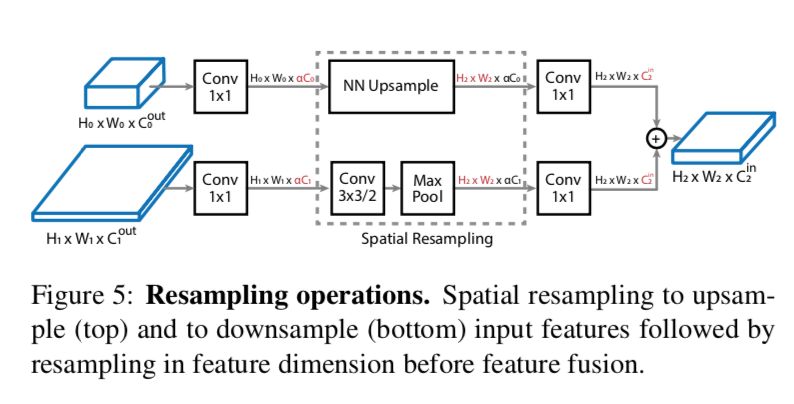

cross-scale connections:

two input connections for each block

from blocks with lower ordering or from the stem

resampling

- narrowing factor $\alpha$: 1x1 conv

- upsampling: interpolation

- downsampling: 3x3 stride-2 conv

element-wise add

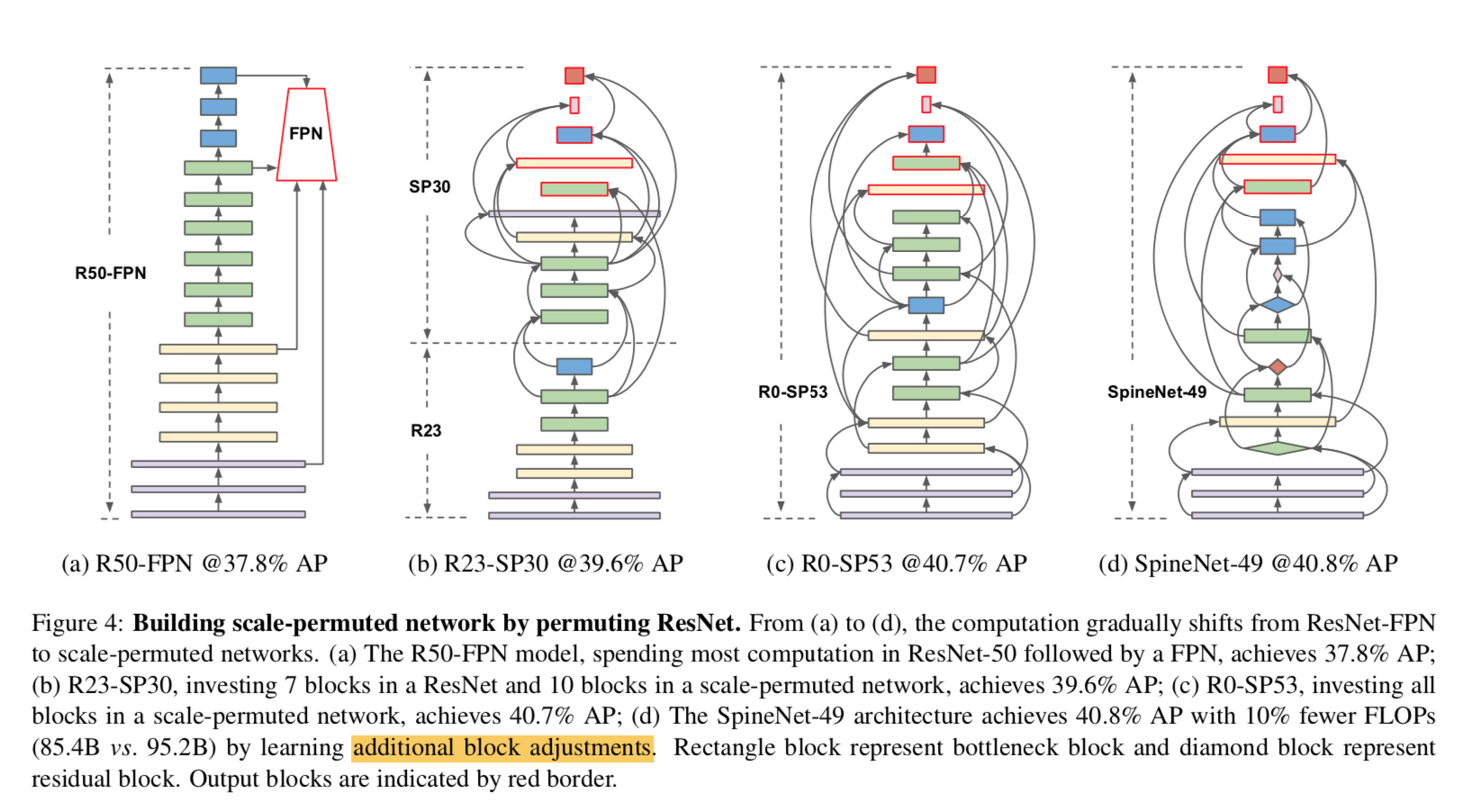
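The resampling step can be sketched as a shape-level plan: given a parent block and a target block, decide the channel width after the 1x1 conv and whether the spatial size must grow or shrink. This only covers bookkeeping, not the convolutions themselves. `ResamplePlan`, `plan_resample`, and the default `alpha=0.5` are hypothetical names and values introduced for this sketch.

```python
from dataclasses import dataclass


@dataclass
class ResamplePlan:
    mid_channels: int   # channels after the 1x1 conv that applies alpha
    spatial_op: str     # "upsample", "downsample", or "identity"
    scale_factor: int   # spatial ratio between parent and target feature maps


def plan_resample(src_level: int, src_channels: int, dst_level: int,
                  alpha: float = 0.5) -> ResamplePlan:
    """Plan how a parent feature at src_level is brought to dst_level.

    A higher level means a lower resolution, so moving to a lower level
    requires upsampling (interpolation) and moving to a higher level
    requires downsampling (3x3 stride-2 conv in the notes).
    alpha=0.5 is an assumed default, not a value taken from the notes.
    """
    mid_channels = int(src_channels * alpha)
    diff = dst_level - src_level
    if diff < 0:
        op = "upsample"
    elif diff > 0:
        op = "downsample"
    else:
        op = "identity"
    return ResamplePlan(mid_channels, op, 2 ** abs(diff))


# Parent at L5 with 256 channels feeding a target block at L3.
print(plan_resample(5, 256, 3))
```

Both parents of a block are planned this way; once they share the target's spatial size, they are merged with an element-wise add.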

block adjustment

- intermediate blocks can adjust their scale level and block type

- level adjustment chosen from {-1, 0, 1, 2}

- block type selected from bottleneck / residual block

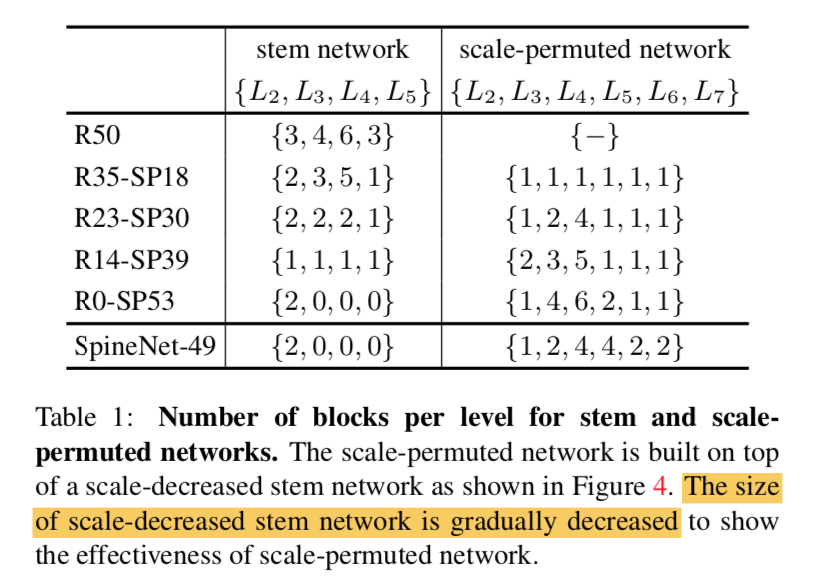
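To make the three search dimensions concrete (scale permutation, cross-scale connections, block adjustment), here is a minimal random sampler over that space. It is a simplification under stated assumptions: every block is treated as an intermediate block, levels are not clamped to a valid range, and the stem is assumed to contribute two candidate blocks. The real search uses NAS rather than uniform sampling, and `sample_architecture` is a hypothetical helper.

```python
import random

LEVEL_ADJUSTMENTS = (-1, 0, 1, 2)
BLOCK_TYPES = ("bottleneck", "residual")


def sample_architecture(base_levels, num_stem_blocks=2, seed=None):
    """Sample one candidate from a simplified scale-permuted search space.

    Candidate parent indices 0..num_stem_blocks-1 refer to stem blocks;
    index num_stem_blocks + j refers to the j-th block of the permuted network.
    """
    if num_stem_blocks < 2:
        raise ValueError("need at least two stem blocks to pick two parents")
    rng = random.Random(seed)

    # Scale permutation: reorder the blocks.
    order = list(range(len(base_levels)))
    rng.shuffle(order)

    blocks = []
    for position, base_idx in enumerate(order):
        # Cross-scale connections: two distinct parents with lower ordering.
        num_candidates = num_stem_blocks + position
        parents = sorted(rng.sample(range(num_candidates), 2))
        blocks.append({
            # Block adjustment: shift the level and choose the block type.
            "level": base_levels[base_idx] + rng.choice(LEVEL_ADJUSTMENTS),
            "type": rng.choice(BLOCK_TYPES),
            "parents": parents,
        })
    return blocks


for block in sample_architecture([3, 4, 5, 6, 7], seed=0):
    print(block)
```

Because parents are always drawn from lower indices, every sampled candidate respects the forward-only connection rule.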

family of models

- R[N]-SP[M]: N feature layers in the stem and M feature layers in the scale-permuted network

- gradually shift from stem to SP

with size decreasing

SpineNet family

- basic: SpineNet-49

- SpineNet-49S: number of channels scaled down by 0.65

- SpineNet-96: doubles the number of blocks

- SpineNet-143: repeats each block 3 times, fusion narrowing factor $\alpha=1$

- SpineNet-190: repeats each block 4 times, fusion narrowing factor $\alpha=1$, number of channels scaled up by 1.3
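The family can be summarized as a small configuration table. This only records the scaling knobs listed above. `SpineNetVariant` and its field names are hypothetical, "doubles the number of blocks" is read here as repeating each block twice, and `alpha` is left as `None` wherever these notes do not state it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SpineNetVariant:
    channel_scale: float = 1.0      # multiplier on the number of channels
    block_repeats: int = 1          # how many times each block is repeated
    alpha: Optional[float] = None   # fusion narrowing factor; None = not stated in the notes


VARIANTS = {
    "SpineNet-49": SpineNetVariant(),
    "SpineNet-49S": SpineNetVariant(channel_scale=0.65),
    "SpineNet-96": SpineNetVariant(block_repeats=2),
    "SpineNet-143": SpineNetVariant(block_repeats=3, alpha=1.0),
    "SpineNet-190": SpineNetVariant(channel_scale=1.3, block_repeats=4, alpha=1.0),
}

for name, variant in VARIANTS.items():
    print(name, variant)
```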

Experiments

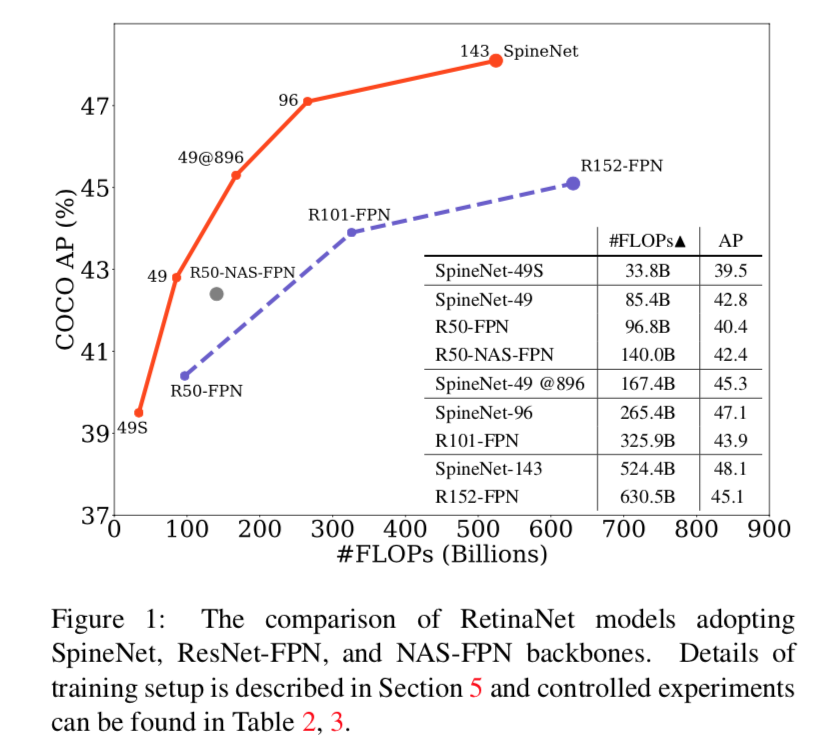

At the mid/heavy scale, it gains about two points over the ResNet-FPN family

At the light scale, it gains about one point over the MobileNet-FPN family