CSPNet: A New Backbone that can Enhance Learning Capability of CNN

Motivation

- Proposes a network designed from the perspective of gradient variability.

- Reduces computation.

- Achieves superior accuracy while remaining lightweight.

Key Ideas

CNN architecture design

- ResNeXt: cardinality can be more effective than width and depth.

- DenseNet: reuses features.

- Partial ResNet: high cardinality and sparse connections, introducing the concept of gradient combination.

Introduces the Cross Stage Partial Network (CSPNet), which targets:

- Strengthening the learning ability of a CNN: maintaining sufficient accuracy while being lightweight.

- Removing computational bottlenecks: distributing the amount of computation evenly across the layers of the CNN.

- Reducing memory costs: adopting cross-channel pooling to compress feature maps during feature pyramid (FPN) generation.

Method

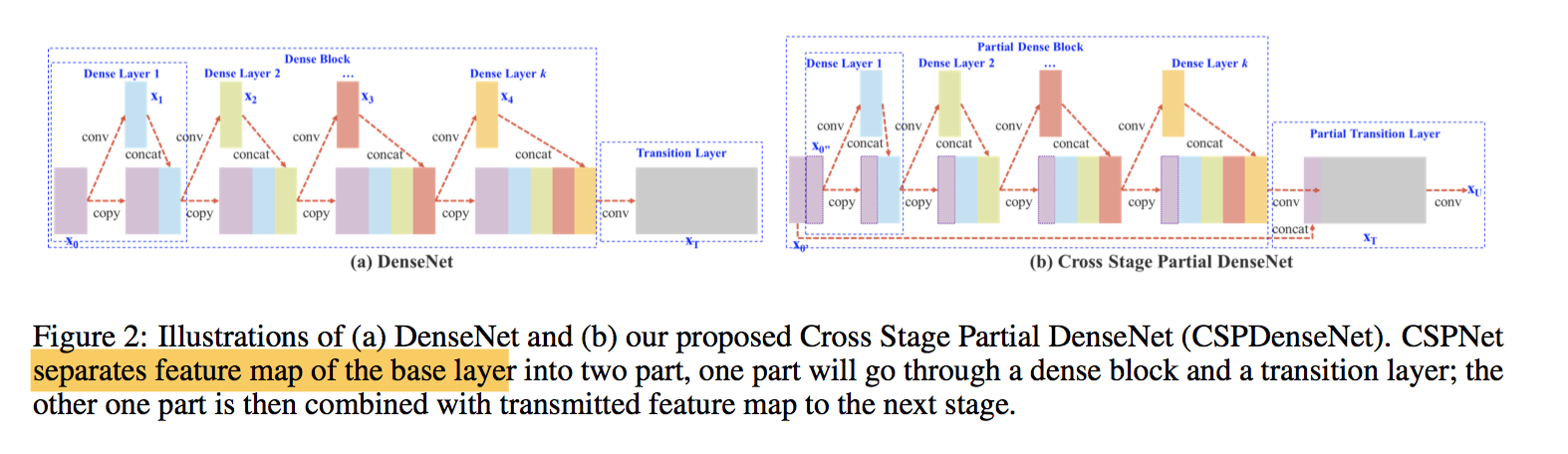

Architecture

- Partial Dense Block: roughly halves the computation, since only half of the base-layer channels pass through the dense block.

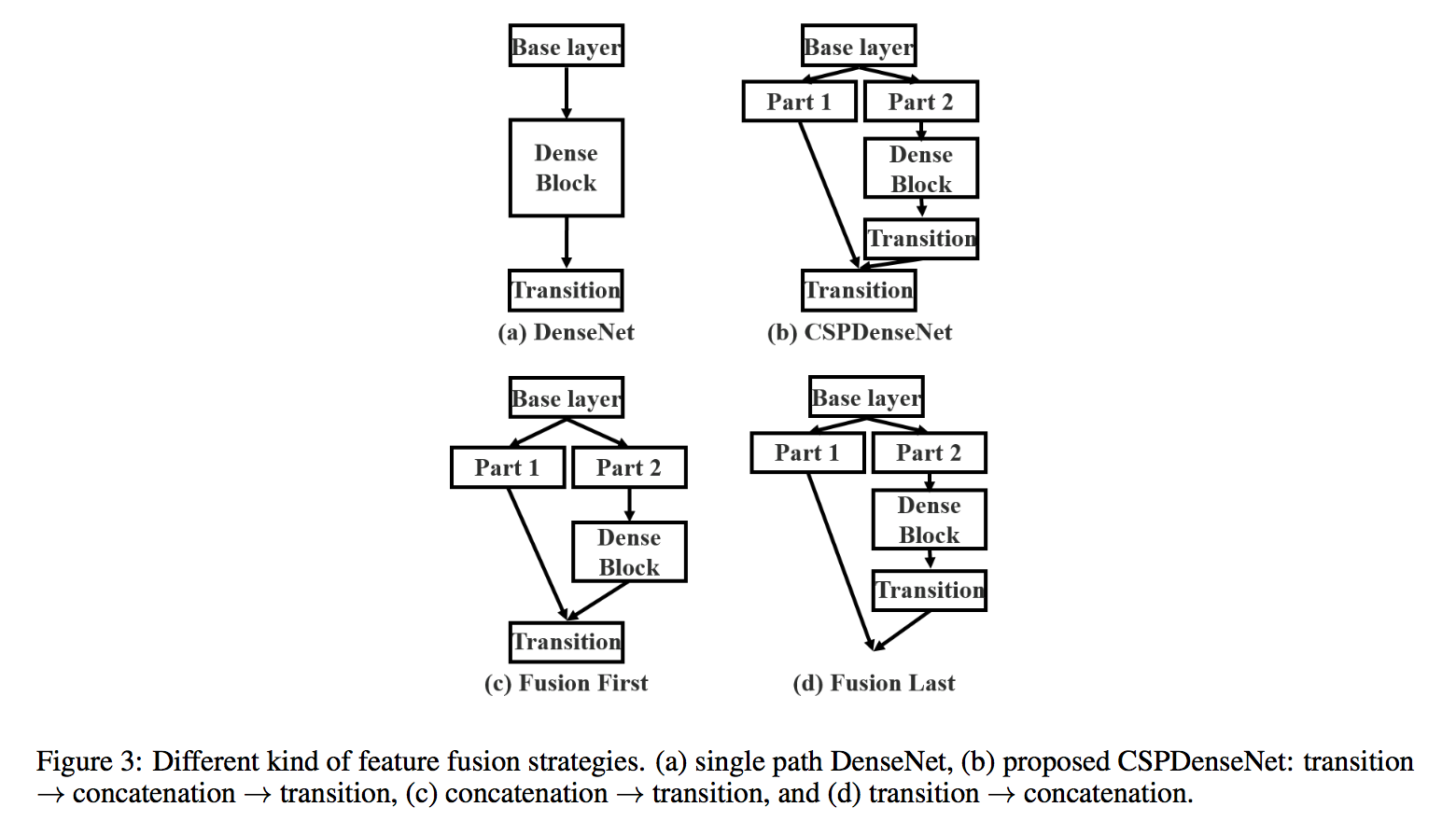

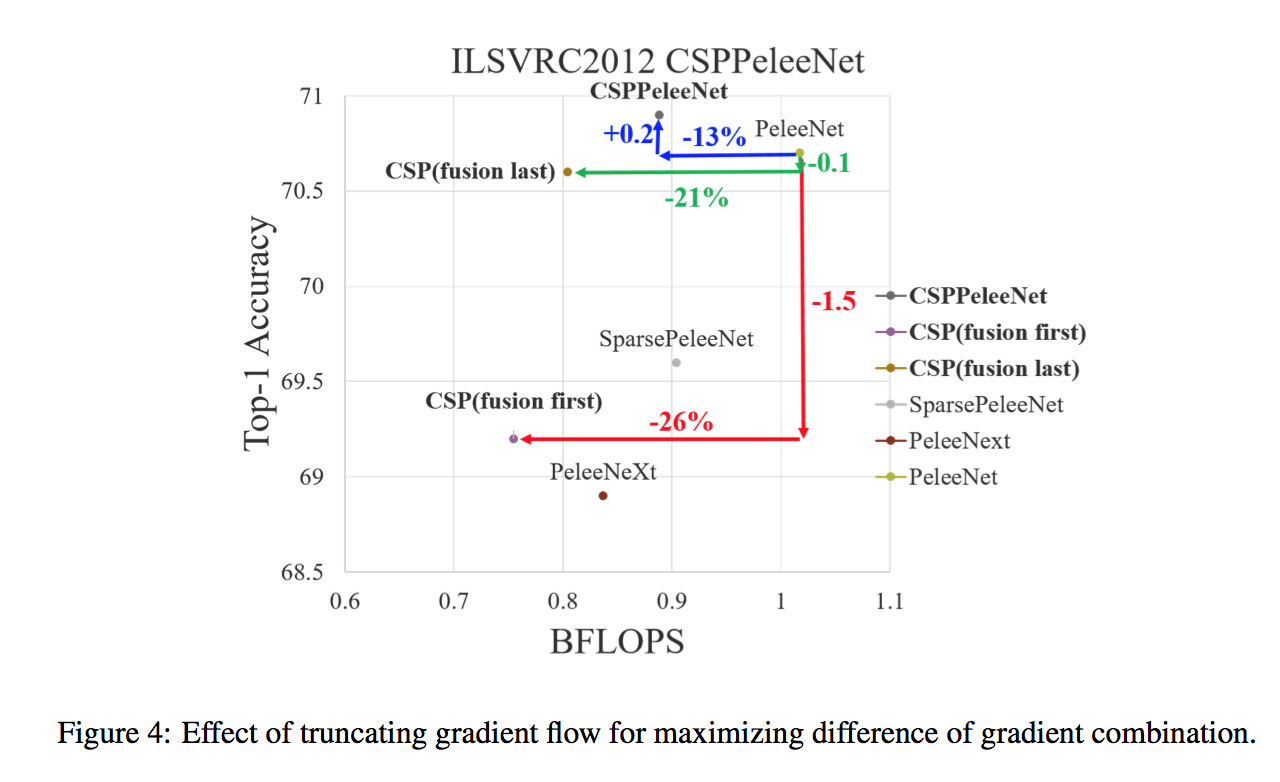
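The "roughly half" claim can be checked with a back-of-the-envelope count. The sketch below is a simplification and not the paper's code: it assumes every dense layer is a single `kernel x kernel` conv emitting `growth` channels, so its cost is proportional to its input width, and it ignores 1x1 bottlenecks and transition layers. All function names are hypothetical.

```python
def dense_layer_input_widths(c_in, growth, num_layers):
    """Channels entering each layer of a DenseNet-style block.

    Layer i sees the block input plus the `growth` channels produced by
    each of the i earlier layers (feature reuse by concatenation).
    """
    return [c_in + i * growth for i in range(num_layers)]


def dense_block_cost(c_in, growth, num_layers, kernel=3):
    """Multiply-adds per output pixel, assuming each layer is one
    kernel x kernel conv that outputs `growth` channels."""
    return sum(w * growth * kernel * kernel
               for w in dense_layer_input_widths(c_in, growth, num_layers))


def partial_dense_ratio(c_base, growth, num_layers, split=0.5):
    """Cost of the partial dense block relative to the full dense block.

    Only the `split` fraction of base-layer channels enters the dense path;
    the other part bypasses it and is concatenated later at no conv cost.
    """
    c_dense = int(c_base * split)
    return (dense_block_cost(c_dense, growth, num_layers)
            / dense_block_cost(c_base, growth, num_layers))


print(round(partial_dense_ratio(256, 32, 4), 3))   # 0.579
print(round(partial_dense_ratio(1024, 32, 4), 3))  # 0.522
```

The ratio approaches 0.5 as the base-layer width grows relative to `growth * num_layers`, which is the regime where the dense block's computational bottleneck is dominated by its wide input.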

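- Partial Transition Layer: the fusion-last strategy saves computation while keeping accuracy nearly unchanged.

- According to the paper, fusion first lets a large amount of gradient information be reused, and the computation cost drops significantly; fusion last gives up part of this gradient reuse, but its accuracy loss is also small (about 0.1 points of top-1 accuracy).

- "It is obvious that if one can effectively reduce the repeated gradient information, the learning ability of a network will be greatly improved."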
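To make the difference between the two fusion orders concrete, here is a rough cost sketch under stated assumptions: the transition is modeled as a single 1x1 conv with a hypothetical output width `c_trans`, and only its per-pixel multiply-adds are counted. It illustrates where the concatenation happens relative to the transition; it does not reproduce the paper's measured numbers.

```python
def transition_macs(c_part1, c_dense_out, c_trans, strategy):
    """Return (per-pixel multiply-adds of a 1x1 transition conv,
    final channel count of the stage) for a given fusion order.

    c_part1     -- channels of the part that bypasses the dense block
    c_dense_out -- channels leaving the dense block
    c_trans     -- output channels of the transition conv (hypothetical)
    """
    if strategy == "fusion_first":
        # concat(part1, dense_out) -> transition:
        # part1 also passes through the transition conv
        return (c_part1 + c_dense_out) * c_trans, c_trans
    if strategy == "fusion_last":
        # transition(dense_out) -> concat with part1:
        # part1 skips the transition conv entirely
        return c_dense_out * c_trans, c_trans + c_part1
    raise ValueError(f"unknown strategy: {strategy}")


print(transition_macs(64, 128, 128, "fusion_first"))  # (24576, 128)
print(transition_macs(64, 128, 128, "fusion_last"))   # (16384, 192)
```

In this toy setting fusion last is cheaper in the transition conv because the bypassed part never enters it; the accuracy side of the trade-off can only come from experiments such as the ones the paper reports.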

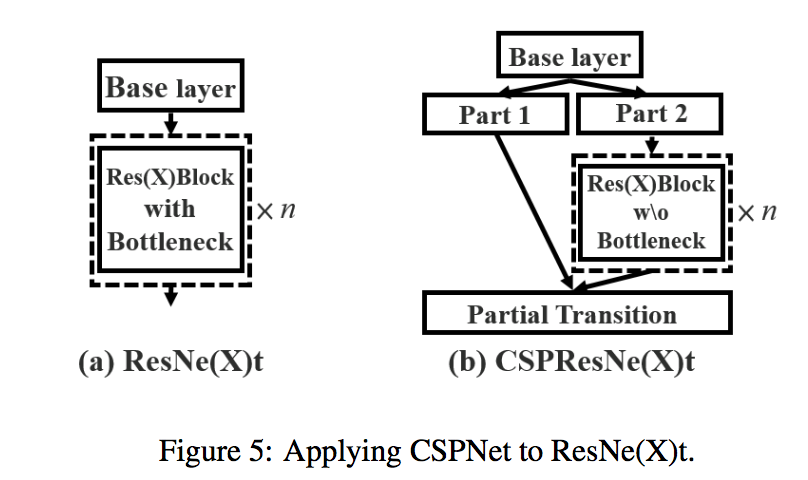

Apply CSPNet to Other Architectures

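- Since only half of the channels take part in the ResNet block computation, there is no need to introduce a bottleneck structure.

- Finally, the outputs of the two paths are concatenated.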
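The split / process-half / concatenate pattern of a CSP stage on ResNet-style blocks can be sketched as a toy over a single channel vector (spatial dimensions dropped). This is an illustration under assumptions, not the paper's implementation: `csp_stage` and the example block are hypothetical, and any transition layers are omitted.

```python
from typing import Callable, List

Vector = List[float]


def csp_stage(x: Vector, block: Callable[[Vector], Vector], num_blocks: int) -> Vector:
    """Toy cross-stage-partial stage over a channel vector.

    Half of the channels go through `num_blocks` residual blocks; the other
    half bypasses them. The two paths are concatenated at the end.
    """
    half = len(x) // 2
    part1, part2 = x[:half], x[half:]
    for _ in range(num_blocks):
        # residual connection inside the processed path
        part2 = [a + b for a, b in zip(part2, block(part2))]
    return part1 + part2  # channel-wise concatenation


# hypothetical block: contributes 1.0 to every channel it sees
print(csp_stage([1.0, 2.0, 3.0, 4.0], lambda v: [1.0] * len(v), num_blocks=2))
# [1.0, 2.0, 5.0, 6.0]
```

Only `part2` is touched by the blocks, which is why the blocks operate at half the stage width.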

EFM (Exact Fusion Model)

Fusion strategies compared

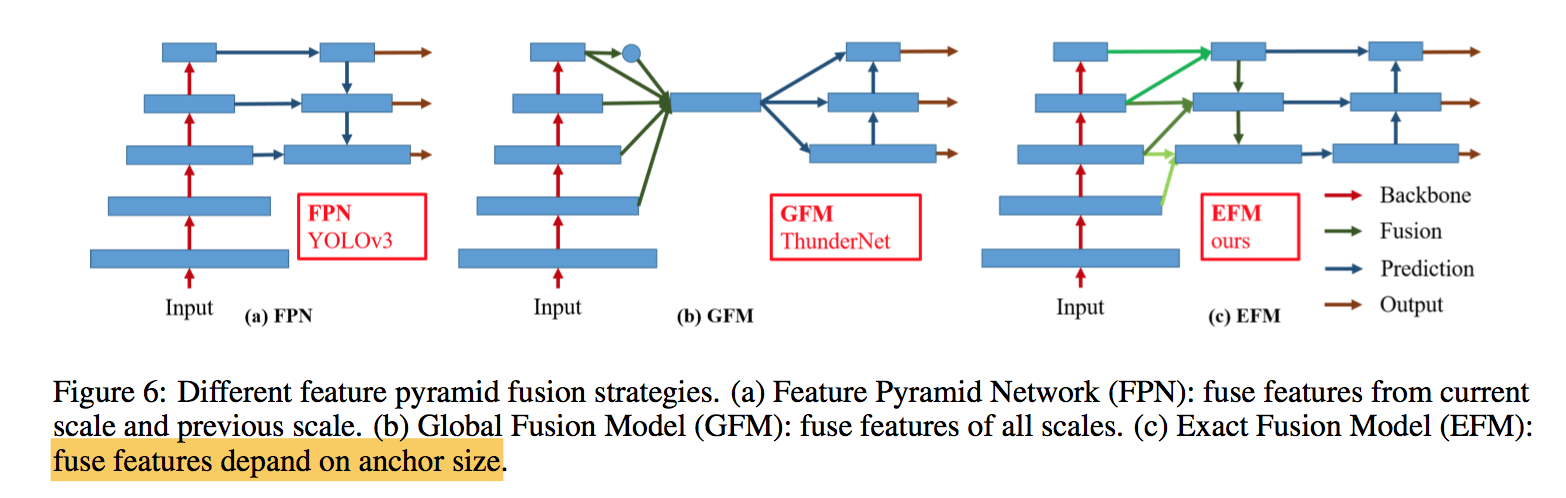

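- Feature Pyramid Network (FPN): fuses features of the current scale and the previous scales.

- Global Fusion Model (GFM): fuses features of all scales.

- Exact Fusion Model (EFM): fuses features matched to the anchor sizes.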
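A small sketch of which pyramid levels each strategy draws on for a given output level. Assumptions: levels run from 0 (finest) to `num_levels - 1` (coarsest); FPN's "previous scales" are read as the coarser levels already visited by its top-down path; EFM is modeled as the current level plus its immediate neighbours. The function name is hypothetical.

```python
def scales_fused(level, num_levels, model):
    """Indices of pyramid levels whose features are fused for `level`."""
    if not 0 <= level < num_levels:
        raise ValueError("level out of range")
    if model == "FPN":
        # top-down path: the level itself plus every coarser level
        return list(range(level, num_levels))
    if model == "GFM":
        # global fusion: every level
        return list(range(num_levels))
    if model == "EFM":
        # current level and its adjacent levels, clipped to the pyramid
        return [i for i in (level - 1, level, level + 1) if 0 <= i < num_levels]
    raise ValueError(f"unknown model: {model}")


for m in ("FPN", "GFM", "EFM"):
    print(m, scales_fused(1, 4, m))
# FPN [1, 2, 3]
# GFM [0, 1, 2, 3]
# EFM [0, 1, 2]
```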

EFM details

- Assembles features from three scales: the current scale and its adjacent scales.

- Additionally adds a bottom-up fusion path.

- Uses the Maxout technique to compress the feature maps.
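Maxout-style compression is a form of cross-channel max pooling: each group of `k` channels collapses into one by an element-wise max. The sketch assumes groups of consecutive channels and represents each channel as a flattened list of pixel values; the exact grouping used in the paper is not specified here, and `maxout_compress` is a hypothetical name.

```python
from typing import List

Channel = List[float]  # one flattened H*W feature map


def maxout_compress(channels: List[Channel], k: int) -> List[Channel]:
    """Compress C channels to C // k with an element-wise max over each
    group of k consecutive channels (cross-channel max pooling)."""
    if k <= 0 or len(channels) % k:
        raise ValueError("channel count must be a positive multiple of k")
    out = []
    for start in range(0, len(channels), k):
        group = channels[start:start + k]
        # zip(*group) walks the same spatial position across the k channels
        out.append([max(vals) for vals in zip(*group)])
    return out


print(maxout_compress([[1.0, 5.0], [3.0, 2.0], [0.0, 0.0], [-1.0, 4.0]], k=2))
# [[3.0, 5.0], [0.0, 4.0]]
```

With `k = 2` the channel count (and hence the memory of the fused feature maps) is halved, at the cost of keeping only the strongest response per group.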


Conclusion

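Judging from the experimental results:

- In classification, CSPNet reduces computation, but the accuracy gain is small.

- In object detection, using CSPNet as the backbone brings a larger improvement: it effectively strengthens the learning ability of the CNN while also reducing computation. The proposed EFM is 2 fps slower than GFM, but significantly improves AP and AP50, by 2.1% and 2.4% respectively.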