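0. Before reading

The idea combines:

triplet loss: considers inter-class relationships, but is computationally expensive, and hard samples are difficult to mine

center loss: considers intra-class relationships

TCL: simultaneously increases intra-class compactness and inter-class separability

Each triplet involves only the sample, the center of its own class, and the center of the nearest other class. This avoids the cost of building sample triplets and the difficulty of mining hard samples.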
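
To get a feel for the savings, here is a rough count under a hypothetical setup (balanced classes; the sizes below are made up for illustration). It compares every possible (anchor, positive, negative) sample triplet with the sample-to-center distances a center-based loss has to evaluate:

```python
def count_sample_triplets(n_per_class: int, n_classes: int) -> int:
    """(anchor, positive, negative) triplets with anchor != positive, balanced classes."""
    n = n_per_class * n_classes
    positives = n_per_class - 1   # same class, excluding the anchor itself
    negatives = n - n_per_class   # any sample from another class
    return n * positives * negatives


def count_center_terms(n_per_class: int, n_classes: int) -> int:
    """Sample-to-center distances: every sample vs. every class center."""
    n = n_per_class * n_classes
    return n * n_classes


# Hypothetical sizes: 40 classes with 100 training samples each.
print(count_sample_triplets(100, 40))  # 1544400000
print(count_center_terms(100, 40))     # 160000
```

Roughly four orders of magnitude fewer terms, and no mining step is needed to pick which ones matter.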

Title: Triplet-Center Loss for Multi-View 3D Object Retrieval

Motivation: deep metric learning

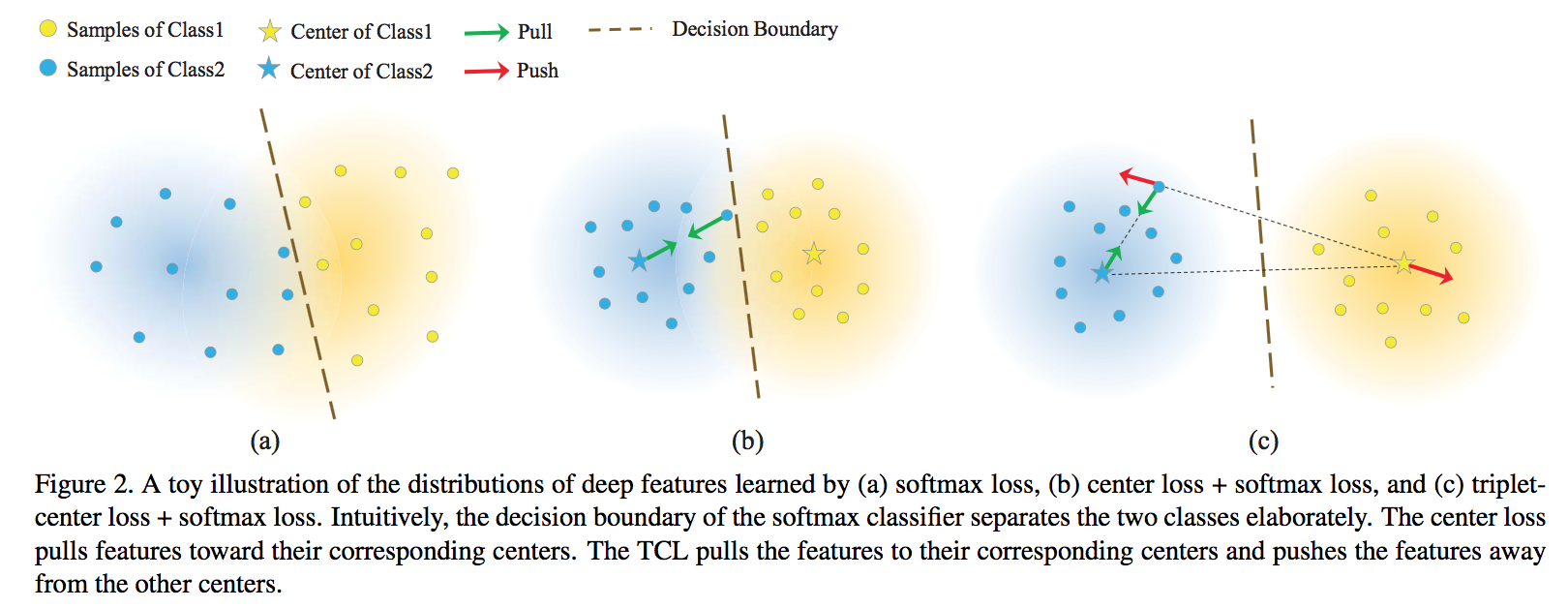

The features learned using softmax loss are not discriminative enough in nature.

Although samples of the two classes are separated by the decision boundary elaborately, there exist significant intra-class variations.

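QUESTION 1: So what? How does this affect the task at hand? The motivation is not argued sufficiently.

QUESTION 2: Overlap in a 2D plane does not imply overlap in the high-dimensional space, so is this kind of illustration actually meaningful?

ANSWER for above: Data that are separable in a high-dimensional space are not necessarily separable after projection onto a 2D plane. Conversely, data that are well separated in the 2D plane remain well separated in the original high-dimensional space. So good separation in 2D guarantees good inter-class dispersion, but overlap in 2D does not show that the dispersion in the high-dimensional space is poor (that could only be shown with a distance defined in the high-dimensional space).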
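
The "conversely" direction rests on the fact that an orthogonal projection never increases distances, so any gap measured in the 2D plane is a lower bound on the gap in the full space. A small NumPy check, assuming random 128-d data and a random 2D subspace (both arbitrary choices for illustration):

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n = 128, 200
x = rng.normal(size=(n, dim))

# Orthonormal basis (columns of q) of a random 2D subspace.
q, _ = np.linalg.qr(rng.normal(size=(dim, 2)))
x2d = x @ q  # coordinates of the orthogonal projection onto that plane


def pairwise_dist(a):
    """All pairwise Euclidean distances between the rows of a."""
    diff = a[:, None, :] - a[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


d_high = pairwise_dist(x)
d_low = pairwise_dist(x2d)

# Every projected distance is <= the original one (up to float error),
# so points that are far apart in 2D are at least as far apart in 128-d.
ok = bool(np.all(d_low <= d_high + 1e-9))
print(ok)  # True
```

The reverse does not hold: two points can be far apart in 128-d yet land close together in the plane, which is exactly why 2D overlap proves nothing.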

Application: 3D object retrieval

Key elements:

- learns a center for each class

- requires that the distances between samples and centers from the same class are smaller than those from different classes; in this way the samples are pulled closer to the corresponding center and meanwhile pushed away from the different centers

- both the inter-class separability and the intra-class variations are considered
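
The second requirement can be written as a margin constraint. The notation here is assumed for illustration: $f_i$ is the embedding of sample $i$, $y_i$ its label, $c_j$ the center of class $j$, $D$ a distance, and $m$ a margin (the margin shows up again in the tuning notes below):

$$
D\left(f_i, c_{y_i}\right) + m \;\le\; \min_{j \neq y_i} D\left(f_i, c_j\right)
$$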

Claims:

Compared with triplet loss, TCL avoids the complex construction of triplets and hard sample mining mechanism.

Compared with center loss, TCL considers not only reducing the intra-class variations but also enlarging the inter-class separability.

QUESTION: What about the comparison with [softmax loss + center loss]?

ANSWER for above: In the paper, "center loss" actually stands for the joint loss [softmax loss + center loss]:

"Since the class centers are updated at each iteration based on a mini-batch instead of the whole dataset, which can be very unstable, it has to be under the joint supervision of softmax loss during training."
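
The instability comes from each update seeing only the samples of a class that happen to be in the current mini-batch. A minimal sketch of the center-loss style update rule, shown only to illustrate the mechanism (TCL's own center updates may differ), with a hypothetical step size `alpha`:

```python
import numpy as np


def update_centers(centers, feats, labels, alpha=0.5):
    """One mini-batch center update in the style of center loss.

    centers: (K, d) current class centers
    feats:   (N, d) embeddings in the current mini-batch
    labels:  (N,)   integer class ids
    alpha:   step size for the centers (hypothetical value)
    """
    new_centers = centers.copy()
    for j in np.unique(labels):  # only classes present in the batch move
        mask = labels == j
        # delta_j = sum_i (c_j - x_i) / (1 + n_j), over the batch samples of class j
        delta = (centers[j] - feats[mask]).sum(axis=0) / (1.0 + mask.sum())
        new_centers[j] = centers[j] - alpha * delta
    return new_centers


centers = np.zeros((2, 2))
feats = np.array([[2.0, 0.0], [4.0, 0.0]])
labels = np.array([0, 0])
new_centers = update_centers(centers, feats, labels)
# class 0's center moves to (1, 0); class 1 is absent from the batch and stays at the origin
print(new_centers)
```

Each step is driven by whatever few samples of a class land in the batch, so early in training (random centers, random embeddings) the centers can wander, which is where an auxiliary softmax signal helps.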

Approach in this paper:

- the proposed TCL is used as the supervision loss

- the softmax loss can also be combined with it as an addition
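
One natural way to write the combined objective, with a hypothetical weight $\lambda$ (the exact weighting used in the paper is not restated in these notes); $\lambda = 0$ corresponds to TCL alone:

$$
L = L_{tc} + \lambda \, L_{softmax}
$$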

Details:

TCL:

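The first term is the center-loss part, i.e. the intra-class Euclidean distance between a sample and its own class center; the second term is the distance between each sample and its nearest negative center (the closest center of any other class). The two are compared through a hinge with margin m.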
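
A minimal NumPy sketch of the loss as described above, assuming D is half the squared Euclidean distance, the centers are given as an array, and the per-sample hinge terms are summed over the batch (averaging would work equally well). Gradients and center learning are left out:

```python
import numpy as np


def triplet_center_loss(feats, labels, centers, margin=5.0):
    """Hinge on (distance to own center + margin - distance to nearest other center).

    feats:   (N, d) embeddings
    labels:  (N,)   integer class ids
    centers: (K, d) class centers
    Assumes D(f, c) = 0.5 * ||f - c||^2.
    """
    # (N, K) distance from every sample to every center
    diff = feats[:, None, :] - centers[None, :, :]
    dist = 0.5 * (diff ** 2).sum(axis=-1)

    idx = np.arange(len(labels))
    pos = dist[idx, labels]              # distance to the sample's own center
    neg = dist.copy()
    neg[idx, labels] = np.inf            # exclude the own center
    nearest_neg = neg.min(axis=1)        # closest center of any other class
    return float(np.maximum(pos + margin - nearest_neg, 0.0).sum())


centers = np.array([[0.0, 0.0], [10.0, 0.0]])
# Both samples sit near their own centers: hinge inactive, loss 0.
print(triplet_center_loss(np.array([[1.0, 0.0], [9.0, 0.0]]), np.array([0, 1]), centers))
# A sample exactly halfway between the centers: D_pos == D_neg, so loss == margin.
print(triplet_center_loss(np.array([[5.0, 0.0]]), np.array([0]), centers))
```

Only K distances per sample are computed, and the "hard negative" is simply the nearest wrong center, which is how the mining problem disappears.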

"Unlike center loss, TCL can be used independently from softmax loss. However…"

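The authors explain that, because the center layer is randomly initialized and the centers are updated batch by batch, the early stage of training is tricky, "while softmax loss could serve as a good guider for seeking better class centers".

The hyperparameter notes state "m is fixed to 5", which indicates that the paper does not normalize the feature vectors (by contrast, FaceNet L2-normalizes its embeddings so that they all lie on a hypersphere). On the unit hypersphere the squared Euclidean distance between any two embeddings is at most 4, so a margin of 5 could never be satisfied.

Evaluation metrics: AUC and mAP. This is a retrieval task: what is ultimately needed is the embedding; given a query, the system retrieves the top matches.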
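
A quick numerical check of the bound, assuming D is the plain squared Euclidean distance (with a factor 1/2 the bound drops to 2, which only strengthens the argument). The helper `best_case_hinge` is a hypothetical name for illustration:

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(1000, 64))
x /= np.linalg.norm(x, axis=1, keepdims=True)  # L2-normalize: every row now has norm 1

# For unit vectors, ||a - b||^2 = 2 - 2 * cos(a, b), which lies in [0, 4].
sq_dist = 2.0 - 2.0 * (x @ x.T)
print(bool(sq_dist.max() <= 4.0 + 1e-9))  # True


def best_case_hinge(margin: float, max_sq_dist: float = 4.0) -> float:
    """Smallest possible max(D_pos + margin - D_neg, 0) when every D <= max_sq_dist."""
    # Best case: the sample sits on its own center (D_pos = 0) and the nearest
    # negative center is as far away as the sphere allows (D_neg = max_sq_dist).
    return max(0.0 + margin - max_sq_dist, 0.0)


print(best_case_hinge(5.0))  # 1.0 -> the loss can never reach zero
print(best_case_hinge(0.2))  # 0.0 -> a small margin is satisfiable
```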
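
A minimal sketch of the mAP side of the evaluation, assuming the gallery is ranked by Euclidean distance to the query embedding and every gallery item with the query's label counts as relevant (whether the query itself is excluded from the gallery is a protocol detail not covered here). AUC is not sketched:

```python
import numpy as np


def average_precision(query, query_label, gallery, gallery_labels):
    """AP for one query: rank the gallery by distance, average the precision at each hit."""
    dist = np.linalg.norm(gallery - query, axis=1)
    order = np.argsort(dist)
    hits = gallery_labels[order] == query_label
    if not hits.any():
        return 0.0
    ranks = np.arange(1, len(hits) + 1)
    precision_at_k = np.cumsum(hits) / ranks
    return float(precision_at_k[hits].mean())


def mean_average_precision(queries, query_labels, gallery, gallery_labels):
    """mAP: mean of the per-query average precisions."""
    return float(np.mean([average_precision(q, l, gallery, gallery_labels)
                          for q, l in zip(queries, query_labels)]))


gallery = np.array([[0.0, 0.0], [1.0, 0.0], [10.0, 0.0]])
gallery_labels = np.array([0, 1, 0])
# Ranking for this query: hit, miss, hit -> AP = (1/1 + 2/3) / 2 = 5/6
print(average_precision(np.array([0.1, 0.0]), 0, gallery, gallery_labels))
```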

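Reviews:

- My take:

- A softmax classifier only aims to make the data separable; it gives no concrete description of the decision boundaries or of what the feature vectors mean spatially. Deep metric learning brings in this concrete, geometric meaning, and only on that basis does it make sense to talk about distances and centers.

- For closed-set classification (a finite, known set of classes), whether the decision boundary has a geometric interpretation matters little. The main point of making the known data distribution as discriminative as possible is to handle unknown classes: when the model is given data from an unknown class, we want it to flag the sample as unknown instead of assigning it to some known class (as softmax does).

- TCL's biggest contribution is probably the idea of using class centers instead of samples for the metric judgement, which eases the heavy computation of triplet loss; triplet loss is genuinely hard to train in practice, a heartless GPU-devouring machine.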

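
The open-set argument above (flagging samples of unknown classes instead of forcing them into a known class) can be sketched with learned centers: predict the nearest center, but reject the sample if even that center is too far away. This is a hypothetical illustration, not something the paper evaluates; `predict_or_reject` and `threshold` are made-up names, and the threshold would have to be tuned on held-out data:

```python
import numpy as np


def predict_or_reject(feat, centers, threshold):
    """Nearest-center class id, or -1 ('unknown') if the nearest center is farther than threshold."""
    dist = np.linalg.norm(centers - feat, axis=1)
    j = int(np.argmin(dist))
    return j if dist[j] <= threshold else -1


centers = np.array([[0.0, 0.0], [10.0, 0.0]])
print(predict_or_reject(np.array([1.0, 0.0]), centers, threshold=3.0))   # 0
print(predict_or_reject(np.array([5.0, 20.0]), centers, threshold=3.0))  # -1
```

A plain softmax classifier has no such distance to threshold: it always returns one of the known classes.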